*equal contribution

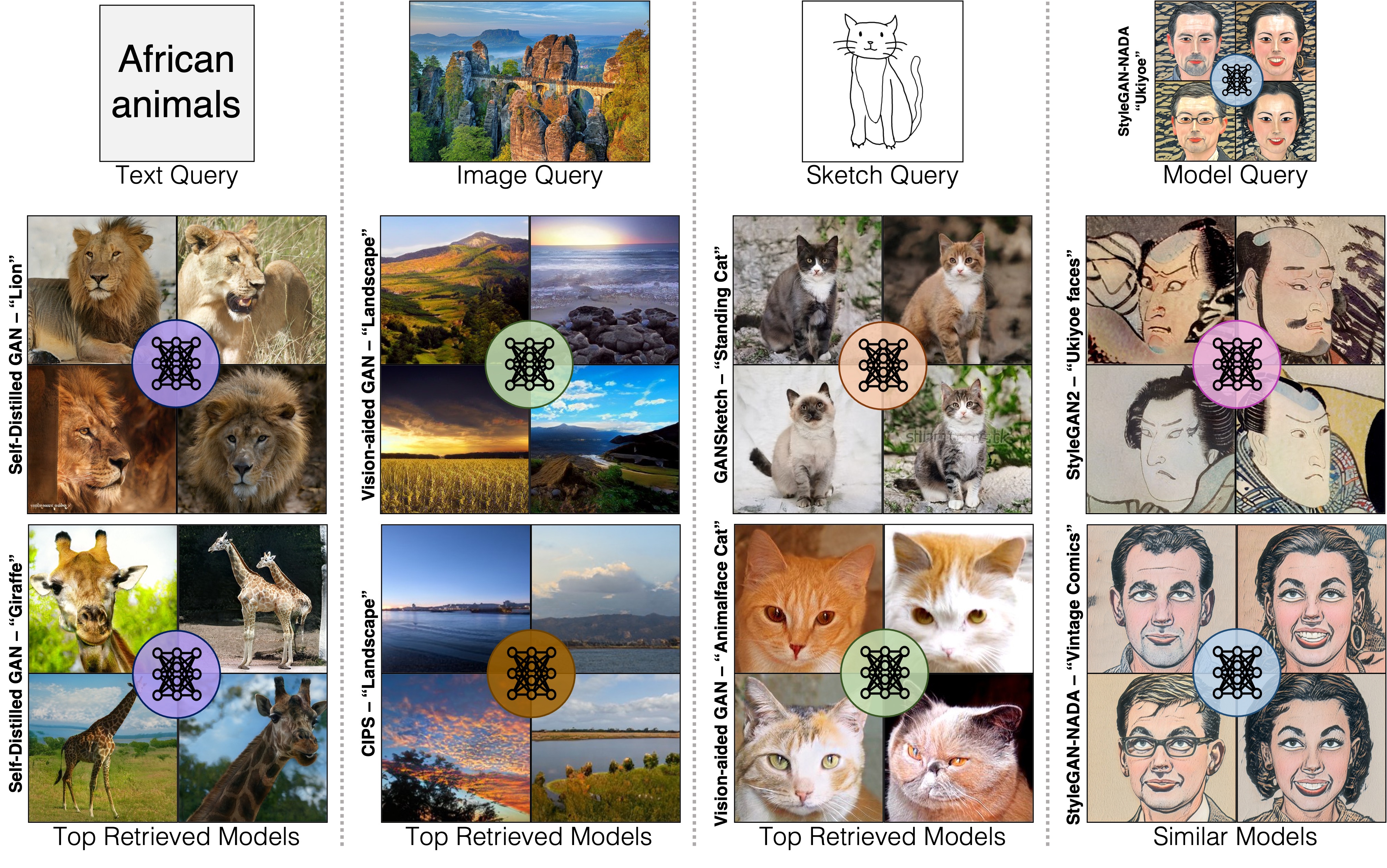

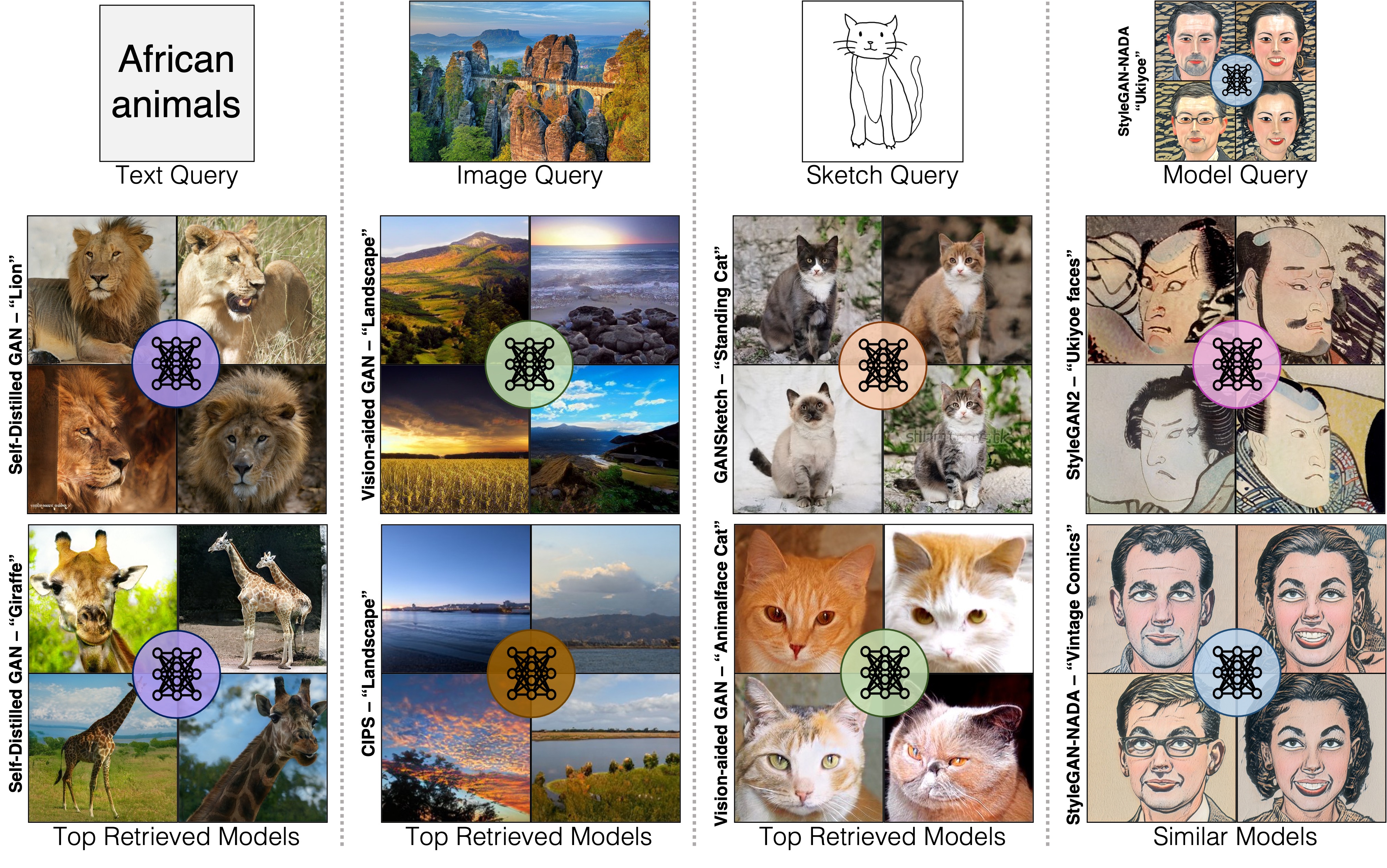

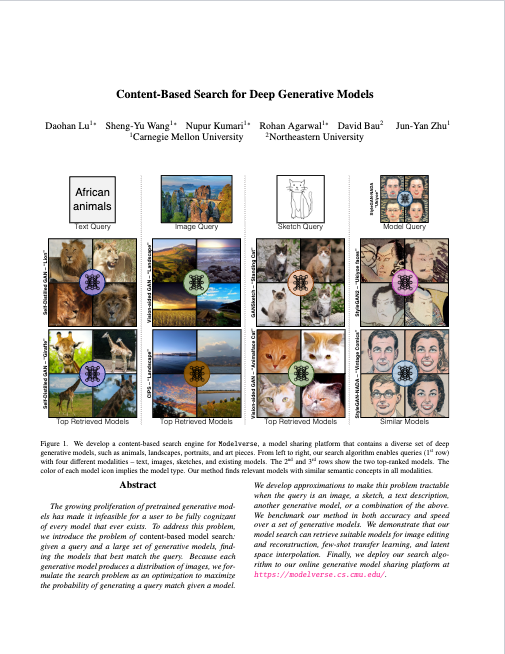

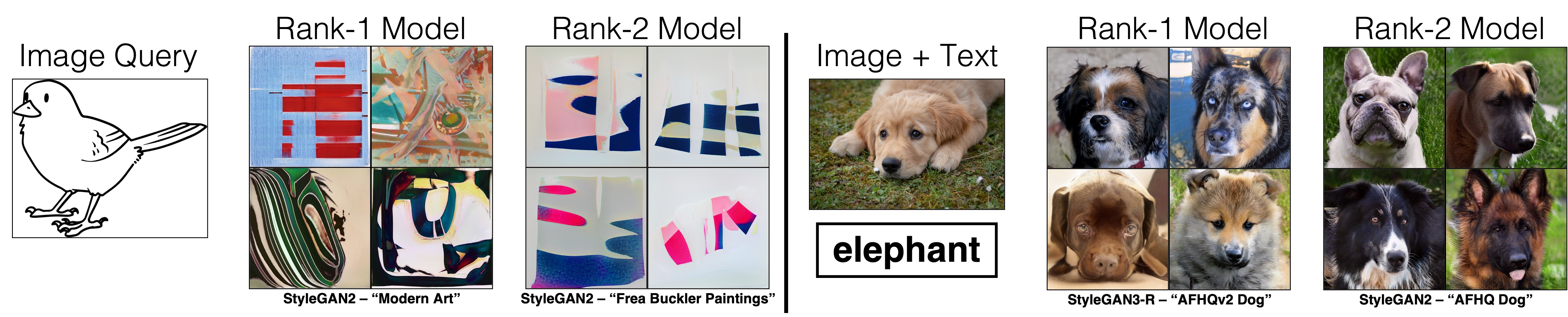

We develop a content-based search engine for Modelverse, a model-sharing platform that hosts a diverse set of deep generative models, including models of animals, landscapes, portraits, and art pieces. From left to right, our search algorithm supports queries (1st row) in four different modalities: text, images, sketches, and existing models. The 2nd and 3rd rows show the two top-ranked models for each query. The color of each model icon indicates the model type. Our method finds relevant models with similar semantic concepts in all modalities.

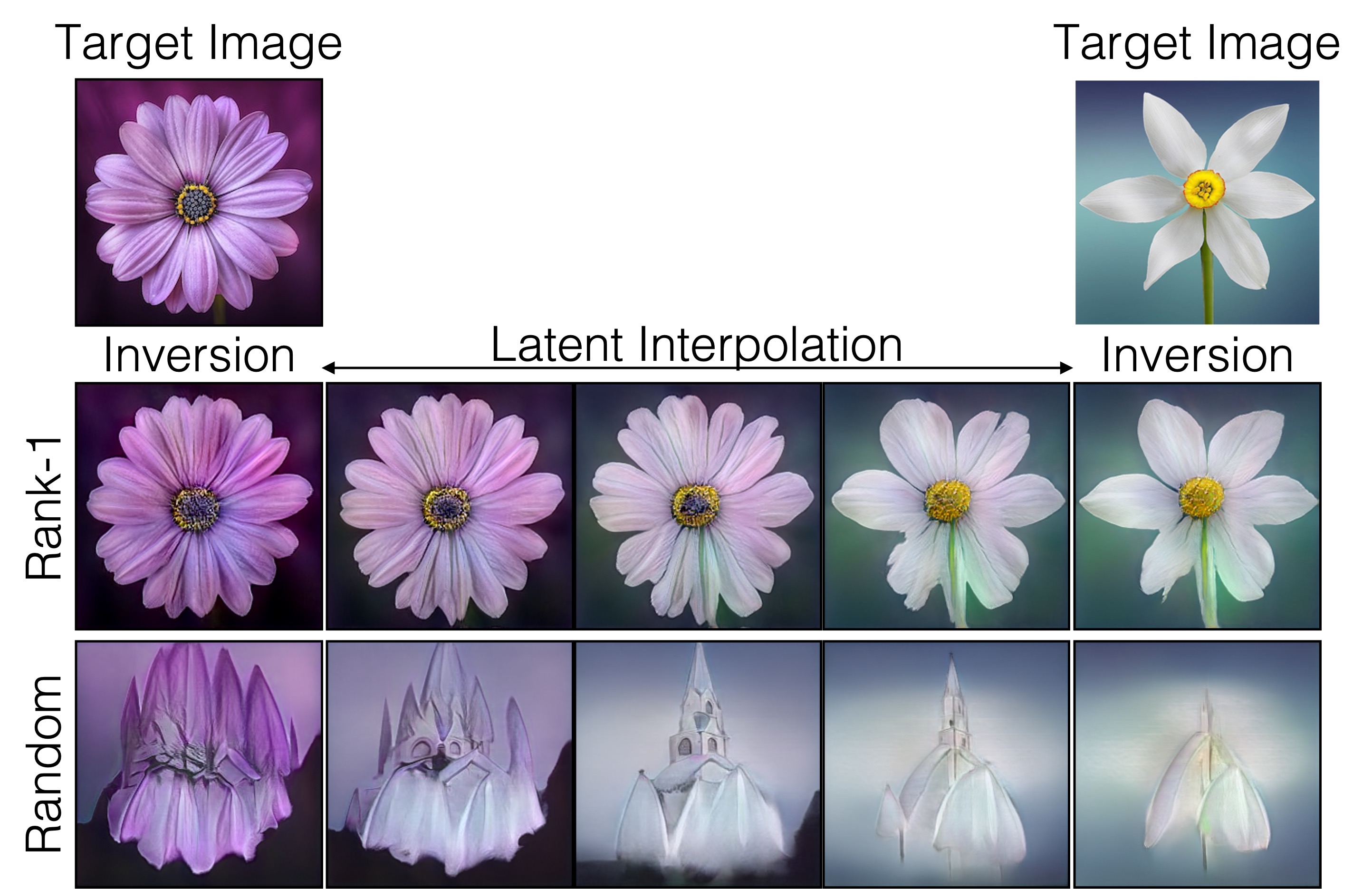

The growing proliferation of pretrained generative models has made it infeasible for a user to be fully cognizant of every model that exists. To address this, we introduce the problem of content-based model retrieval: given a query and a large set of generative models, find the models that best match the query. Because each generative model produces a distribution of images, we formulate the search problem as an optimization that maximizes the probability of generating a query match given a model. We develop approximations to make this problem tractable when the query is an image, a sketch, a text description, another generative model, or a combination of the above. We benchmark our methods in both accuracy and speed over a set of generative models. We further demonstrate that our model search can retrieve good models for image editing and reconstruction, few-shot transfer learning, and latent space interpolation.
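In symbols, this retrieval objective can be written as follows. The notation is an assumption introduced here for illustration ($q$ for the query, $\theta_1, \dots, \theta_N$ for the models in the collection); the text above does not fix any symbols.

$$
\theta^{*} = \arg\max_{\theta \in \{\theta_1, \dots, \theta_N\}} p(q \mid \theta)
$$

Here $p(q \mid \theta)$ denotes the probability that model $\theta$ generates a match for $q$. Sorting the models by this quantity yields a ranked list of results.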

[Click here to try out Modelverse!!]

Daohan Lu*, Sheng-Yu Wang*, Nupur Kumari*, Rohan Agarwal*, David Bau, Jun-Yan Zhu. Modelverse: Content-Based Search for Deep Generative Models. In arXiv, 2022. (Paper)

Our search system consists of a pre-caching stage (a, b) and an inference stage (c). Given a collection of models, (a) we first generate 50K samples for each model. (b) We then encode the sampled images into image features and compute the first- and second-order feature statistics for each model. These statistics are cached in our system for efficiency. (c) At inference time, we support queries of different modalities (text, image, or sketch). We encode the query into a feature vector and assess the similarity between the query feature and each model’s cached statistics. The models with the best similarity scores are retrieved.
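The two stages can be illustrated with a minimal sketch, which is not the authors' implementation. It assumes that the cached statistics are a per-dimension mean and variance, and that similarity is the log-density of the query feature under a diagonal Gaussian with those statistics. Both are simplifying assumptions: the description above only says that first- and second-order statistics are cached. The function names, the model names, and the random stand-in features (used in place of real encoder outputs) are all hypothetical.

```python
import math
import random
from statistics import fmean, pvariance


def cache_model_statistics(features, eps=1e-6):
    """Pre-caching: reduce one model's sample features to per-dimension mean and variance."""
    columns = list(zip(*features))  # transpose: one tuple per feature dimension
    means = [fmean(col) for col in columns]
    # eps keeps every variance strictly positive (an assumption of this sketch)
    variances = [pvariance(col, mu) + eps for col, mu in zip(columns, means)]
    return means, variances


def gaussian_log_density(query, means, variances):
    """Log-density of the query under a diagonal Gaussian with the cached statistics."""
    return sum(
        -0.5 * ((q - m) ** 2 / v + math.log(2.0 * math.pi * v))
        for q, m, v in zip(query, means, variances)
    )


def rank_models(query, cache):
    """Inference: score the query against every cached model, best match first."""
    scores = {name: gaussian_log_density(query, *stats) for name, stats in cache.items()}
    return sorted(scores, key=scores.get, reverse=True)


rng = random.Random(0)
dim = 8


def fake_features(center, n=500):
    """Stand-in for encoded samples of one model (hypothetical data, not real features)."""
    return [[rng.gauss(center, 1.0) for _ in range(dim)] for _ in range(n)]


# (a, b) Pre-caching: statistics are computed once per model and stored.
cache = {
    "model_a": cache_model_statistics(fake_features(0.0)),
    "model_b": cache_model_statistics(fake_features(3.0)),
}

# (c) Inference: a query feature lying near model_b's samples.
query = [3.0] * dim
ranking = rank_models(query, cache)
print(ranking)
```

Because only the cached statistics are touched at query time, the cost of a search in this sketch grows with the number of models rather than with the number of samples generated per model.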

Acknowledgements